Problem

Say we have a Docker image from Docker Hub that we know nothing about. How can we make sure that it contains no obvious malware that would put our use of it at risk?

This article only reflects my own views and methods. Remarks and disagreements are welcome via Twitter.

Two steps (from hell)

There are two steps in this process of "secure-reviewing" the Docker image:

Pre-review

The first step is to ensure that the image is acceptable enough to be used.

This means that we want to be sure that no malware exists in the image, and that the image is "sane" enough to be used.

So the goal of this step is to make sure we're not installing malware.

Post-review

The second step is to ensure that the image has no exploitable vulnerability.

Indeed, even if the image is "sane" (per the first step), we must also ensure that no external threat actor can exploit and abuse the Docker image we'll use.

This second step is not "frozen" in time: it must be repeated throughout the image's life cycle, because a new CVE may be published at any moment, affecting an image that was previously considered "non-vulnerable".

So the goal of this second step is to ensure the image will not be abused during its life cycle.

In this article, I'll focus on the first step. My goal is to gather here techniques for reviewing a Docker image and ensuring that it contains no backdoor, malware and so on, before using it.

Downloading content

In order to review the image, the first step is to download it.

But I don't want to use a docker pull repo/name:tag command, because I don't want to risk crashing my local Docker daemon.

So I want to download the Docker layers instead.

Roughly speaking, Docker layers (as detailed in the doc) are similar to a "diff" of the files in the "virtual Docker OS" for each build step.

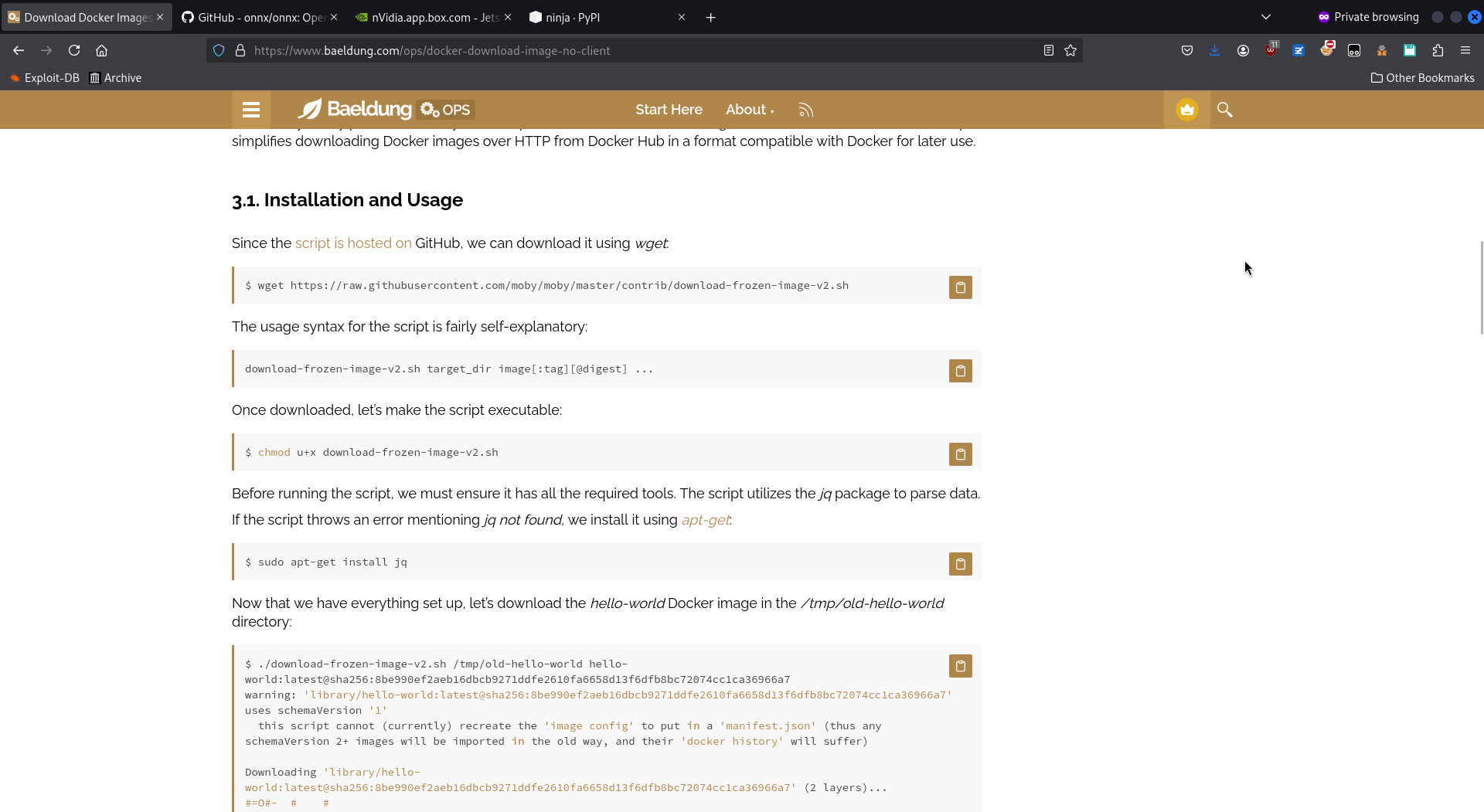

I've also tried the skopeo package, but it wasn't working as expected. Same for the docker_pull.py script, which was not very usable. The download-frozen-image script works better.

Each layer directory contains a "layer.tar" file that you must extract in order to access the actual file contents.

A command to do so may be for i in ./*/*.tar; do echo "$i"; tar -xf "$i" -C "$(dirname "$i")"; done.
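The extraction step can be sketched a bit more defensively as a small shell function. This is only a sketch under assumptions: the function name extract_layers and the rootfs sub-directory are hypothetical names of mine, and the --no-same-owner flag is assumed to be supported by your tar (it is in GNU tar). Extracting each layer into its own sub-directory keeps the layers separate, so you can still tell which build step introduced which file.

```shell
#!/bin/sh
# Extract every <layer>/layer.tar into <layer>/rootfs.
# "rootfs" is a hypothetical directory name chosen for this sketch.
extract_layers() {
    image_dir="$1"
    for archive in "$image_dir"/*/layer.tar; do
        # Skip the unexpanded glob when no layer archive is present.
        [ -f "$archive" ] || continue
        target="$(dirname "$archive")/rootfs"
        mkdir -p "$target"
        echo "Extracting $archive"
        # --no-same-owner: do not try to restore the ownership stored in the archive.
        tar -xf "$archive" -C "$target" --no-same-owner
    done
}
```

Calling extract_layers scan would process the directory used in the rest of this article. Running it as a non-root user is probably wise, since the archives come from an untrusted image.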

Review

Now that we have the layers and have extracted their files, we must review their content. We can use any classic forensic technique.

Review the commands

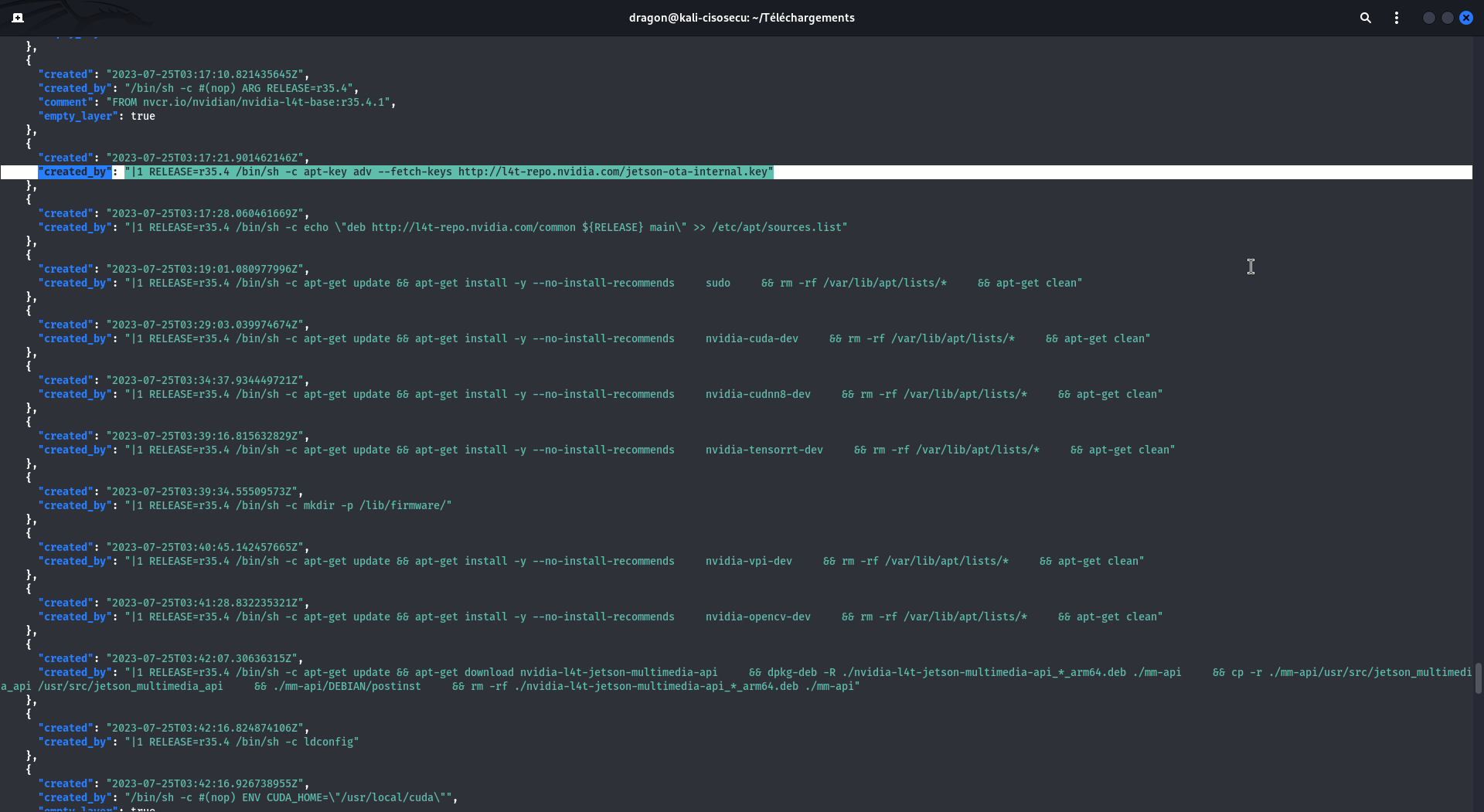

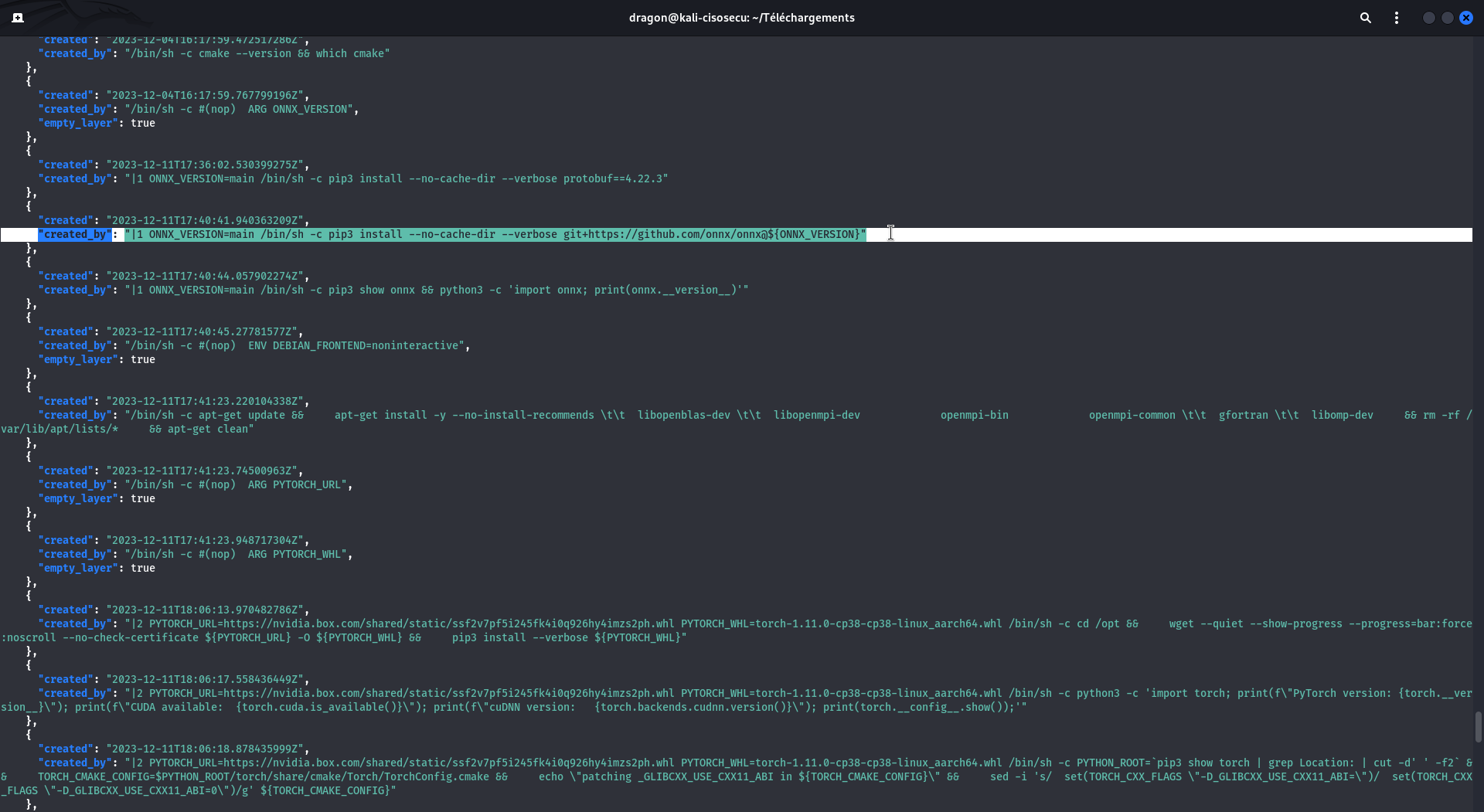

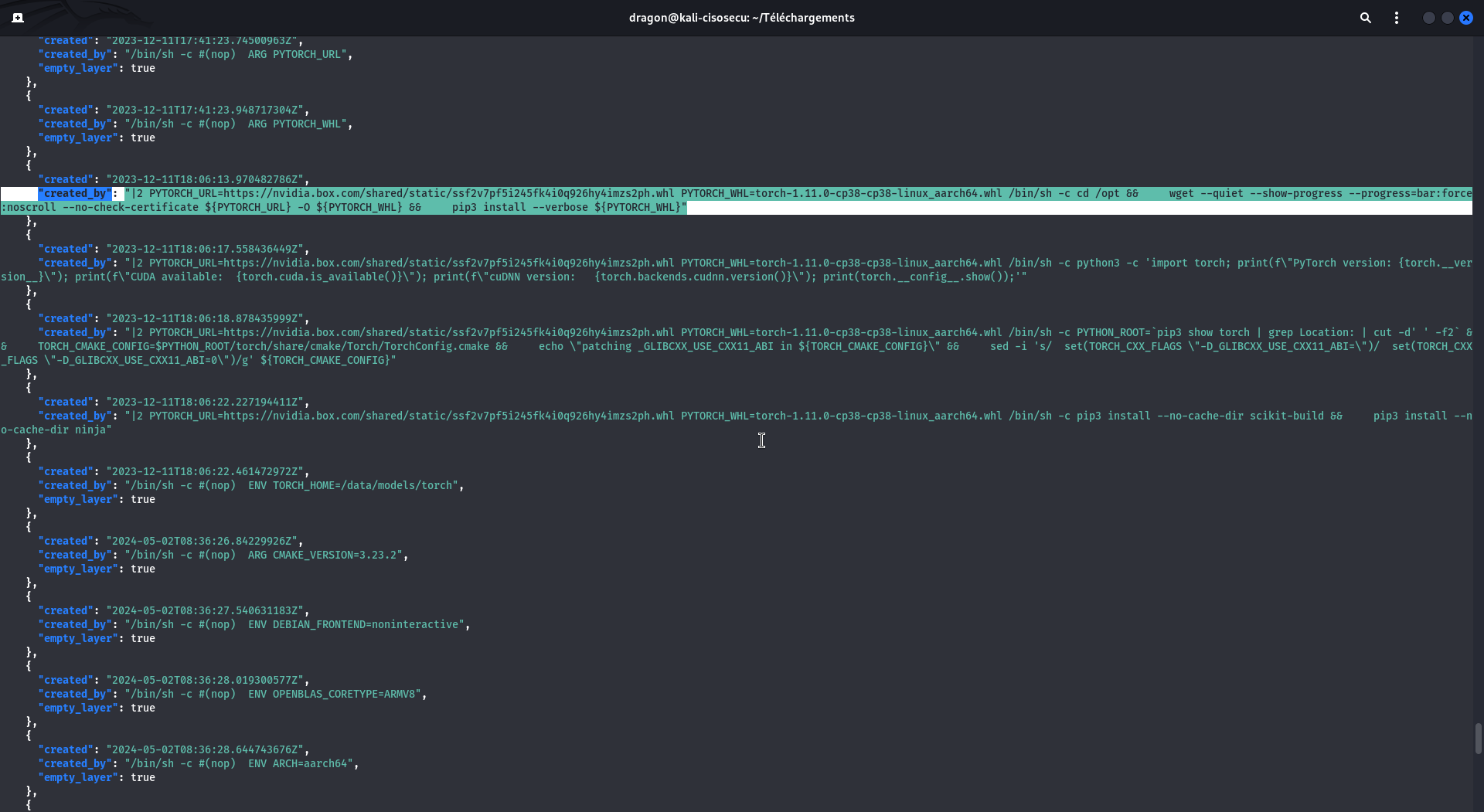

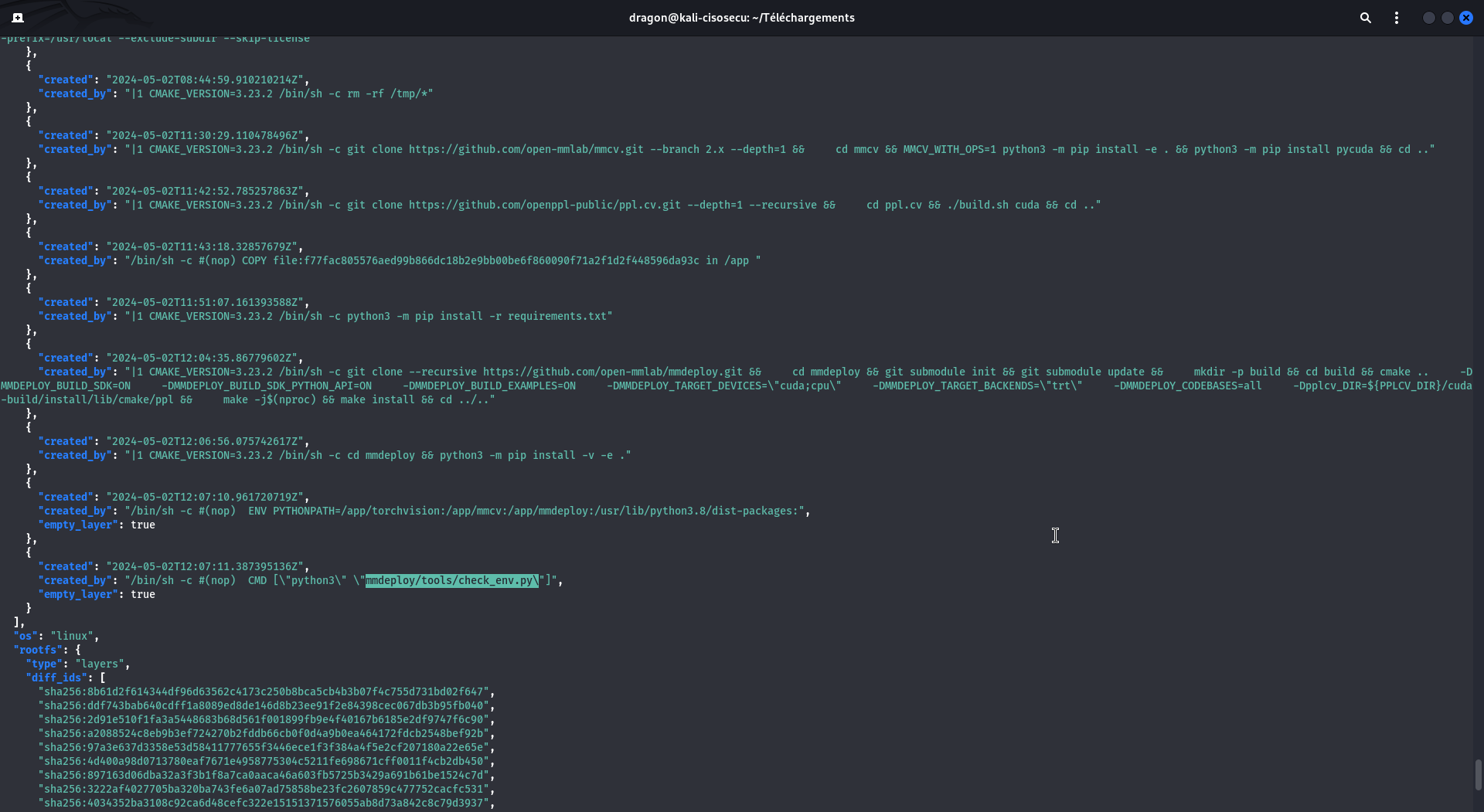

First, you should review the build commands.

These are in the JSON file at the root of the downloaded directory, so a command like

cat scan/b38d20dc45df4b90927e70e299840c351a0abb759a9bf6788e683498a45b5dd6.json | jq . should work.

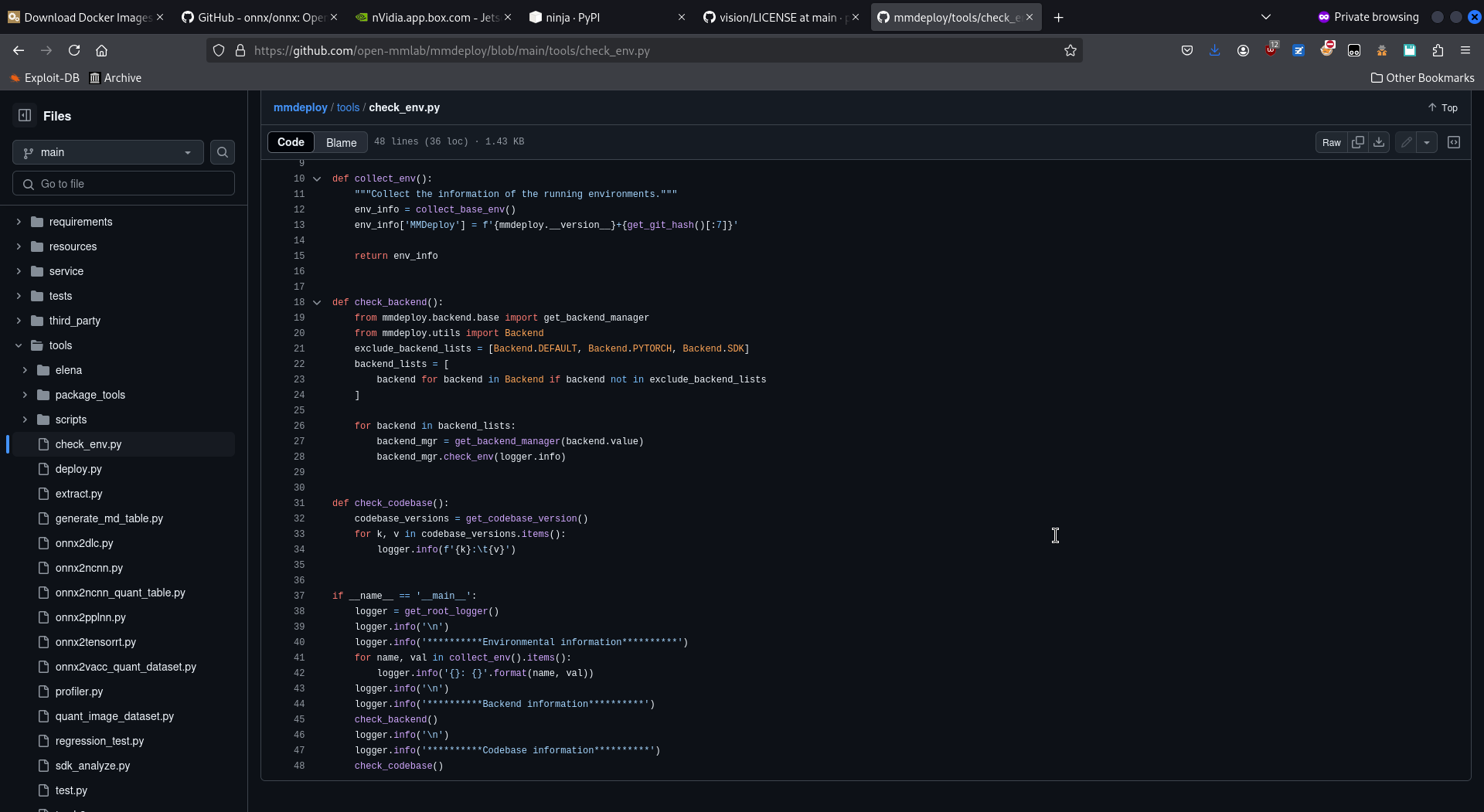

You can also find the same build commands directly on Docker Hub, in the Tags tab, by clicking the digest. But these commands may reference some file that you know nothing about, or run a wget and then execute the result without you knowing what was downloaded and executed.
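To focus on the suspicious build steps, a sketch like the following may help. It assumes the JSON file is a standard image config, where each build step is stored in a history entry under the created_by key. The function names and the keyword list (wget, curl, ADD, COPY) are my own assumptions and should be adapted to what you are looking for.

```shell
#!/bin/sh
# Print the build steps recorded in an image config JSON, one per line.
# Assumes the usual layout: a "history" array whose entries carry "created_by".
list_build_steps() {
    jq -r '.history[] | .created_by // empty' "$1"
}

# Keep only the steps that pull in content the build history does not show.
# The keyword list is a hypothetical starting point, not an exhaustive one.
flag_opaque_steps() {
    list_build_steps "$1" | grep -nE 'wget|curl|ADD |COPY '
}
```

For example, flag_opaque_steps scan/b38d20dc45df4b90927e70e299840c351a0abb759a9bf6788e683498a45b5dd6.json prints the matching steps, prefixed by their position in the build history. Each hit is a file or download that you should then look up in the extracted layers.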

Review the content

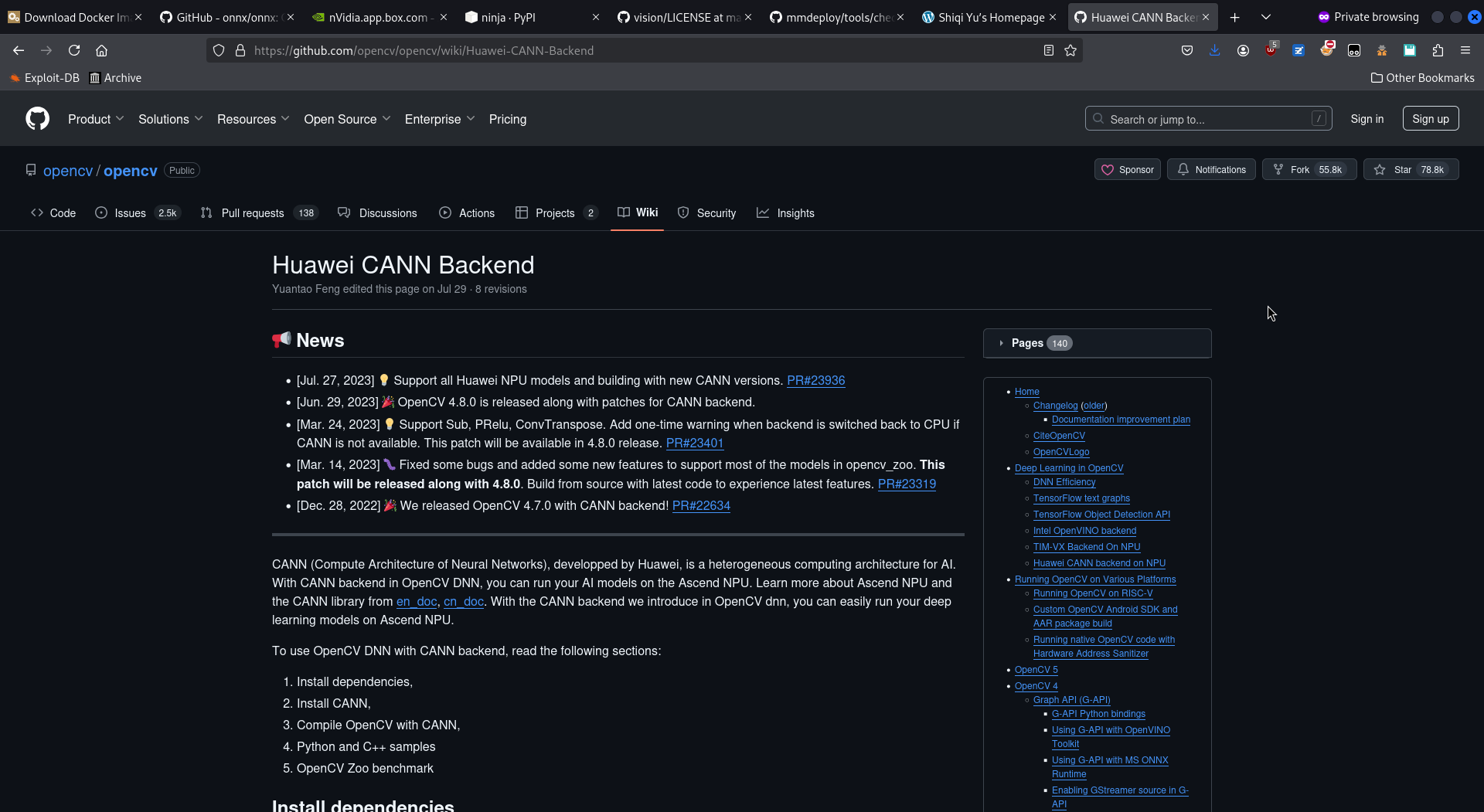

Since we now have the actual contents of the Docker layers, we can start reviewing them for IOCs, like searching for URLs/domains and spotting surprising ones (China-based ones, in this case).

A simple command for that may be

grep -horaisE 'https?://[a-zA-Z0-9_.-]+' | sort -u
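When the list of URLs gets long, it may be easier to look at hosts only, with a hit count for each. The sketch below builds on the same grep; the function name list_url_hosts is hypothetical, and the lowercasing step is my own addition so that Example.com and example.com are counted together.

```shell
#!/bin/sh
# Print each distinct host found in a URL under a directory, most frequent first.
list_url_hosts() {
    grep -horaisE 'https?://[a-zA-Z0-9_.-]+' "$1" \
        | sed -E 's#^[a-zA-Z]+://##' \
        | tr 'A-Z' 'a-z' \
        | sort \
        | uniq -c \
        | sort -rn
}
```

Running list_url_hosts scan gives one line per host. Hosts that appear only once or twice are often the most interesting ones to check by hand.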

Similarly, find . -name 'LICENSE' (or a grep) may quickly reveal some licenses that are incompatible with commercial use.
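The license check can be sketched as follows. Everything here is an assumption to adapt: the function name flag_licenses, the file name patterns, and above all the keyword list, which is only a rough starting point and not a legal assessment of which licenses are acceptable for your use.

```shell
#!/bin/sh
# Keywords that deserve a manual look (hypothetical list, adjust to your policy).
LICENSE_KEYWORDS='AGPL|LGPL|GPL|General Public License|non-?commercial|Creative Commons'

# Print the license files under a directory whose text matches one of the keywords.
flag_licenses() {
    find "$1" -type f \( -iname 'LICENSE*' -o -iname 'COPYING*' \) \
        -exec grep -liaE "$LICENSE_KEYWORDS" {} +
}
```

Running flag_licenses scan prints only the paths of the matching license files, so the output stays short even on large images.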

I may add other IOCs to look for in this article, later on. Stay tuned!

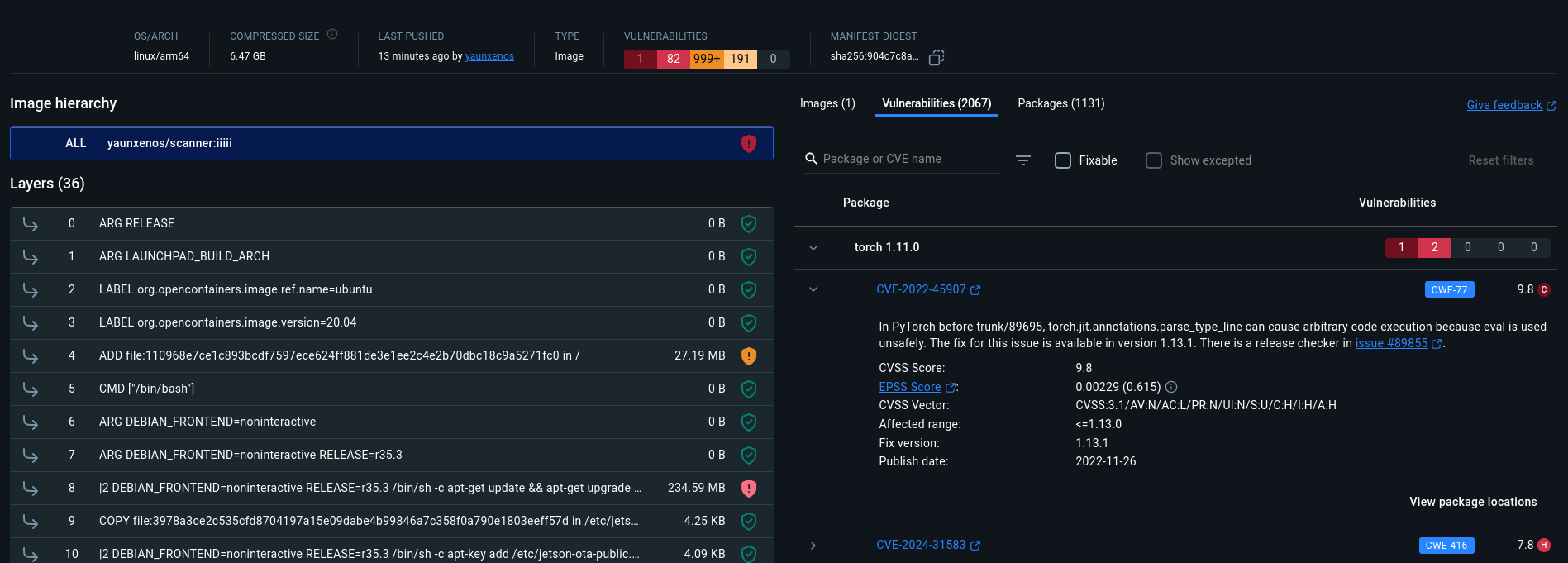

Review the CVEs

Now that we know this image seems "sane" and free of malware, we can check whether it has any known CVEs.

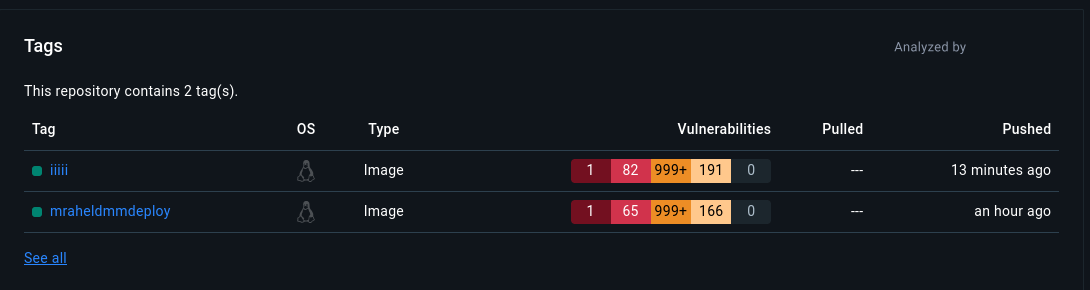

What's sad is that Docker Hub does not let you see the vulnerability report of an uploaded image unless you're its uploader. So the only way I found was to re-upload the image.

Dumb, but working.

# We first install docker if not already installed

sudo apt show docker.io

sudo apt install docker.io

# Then pull the image

sudo docker pull mraheld/mmdeploy:v0.0.1

# Then we tag it with our username/repository_name:whatever

sudo docker image tag mraheld/mmdeploy:v0.0.1 yaunxenos/scanner:mraheldmmdeploy

# We need to log into Docker Hub (--password is optional; if omitted, you will be prompted)

sudo docker login --username=yaunxenos --password=...

# And push the image

sudo docker push yaunxenos/scanner:mraheldmmdeploy

# For next images, it will be simpler as we will only need a pull, image tag, push

sudo docker pull iiiii/something

sudo docker image tag iiiii/something yaunxenos/scanner:iiiii

sudo docker push yaunxenos/scanner:iiiii
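Since the same three commands are repeated for every image, they can be wrapped in a small function. This is a sketch under assumptions: the function name reupload_for_scan and the DOCKER and SCAN_REPO variables are hypothetical, and the tag is derived from the image name by replacing every character that is not allowed in a tag with an underscore, which differs slightly from the hand-written tags above.

```shell
#!/bin/sh
# Command used to call Docker; set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-sudo docker}"
# Repository that receives the copies (taken from the commands above).
SCAN_REPO="${SCAN_REPO:-yaunxenos/scanner}"

# Pull an image, tag it under SCAN_REPO, and push it so it gets scanned.
reupload_for_scan() {
    image="$1"
    # Tags may only contain letters, digits, '_', '.' and '-': replace the rest.
    tag="$(printf '%s' "$image" | tr -c 'A-Za-z0-9_.-' '_')"
    $DOCKER pull "$image" &&
        $DOCKER image tag "$image" "$SCAN_REPO:$tag" &&
        $DOCKER push "$SCAN_REPO:$tag"
}
```

For example, reupload_for_scan mraheld/mmdeploy:v0.0.1 would push the image as yaunxenos/scanner:mraheld_mmdeploy_v0.0.1. The function stops at the first failing command, so nothing is pushed if the pull fails.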

This is a one-shot solution. It's only meant to ensure that no critical CVE in the image puts its users at risk. A life-cycle solution is recommended for actual Docker image security, but it is out of scope for this short article.

I think it's a shame Docker Hub does not allow seeing the CVEs of existing images. Making this more open would be far more efficient than re-uploading images like I did.